AI Progress & Power Players Shaping Tomorrow

Biweekly Data & Analytics Digest: Cliffside Chronicle

Noteworthy 1: State of AI Report 2024

I look forward to this report every year. It’s thorough yet easy to read. The State of AI Report 2024 is authored by AI investor Nathan Benaich and Air Street Capital. It offers a comprehensive analysis of the latest developments in artificial intelligence and data. I also appreciate that they review the accuracy of their predictions from each previous year.

Here are some key takeaways:

Convergence in AI Model Performance: The performance gap between OpenAI’s GPT-4 and other models is narrowing, indicating that the playing field in AI capabilities is leveling.

AI’s Expansion Beyond Language: Foundation models are extending their applications into fields like mathematics, biology, genomics, physical sciences, and neuroscience.

Revenue Generation by AI Companies: Some AI firms, particularly those in video and audio generation, are beginning to generate significant revenue, though questions about long-term sustainability remain.

Emergence of Pseudo-Acquisitions: Certain AI companies are opting for pseudo-acquisitions as an exit strategy due to challenges in maintaining a viable business model.

_____

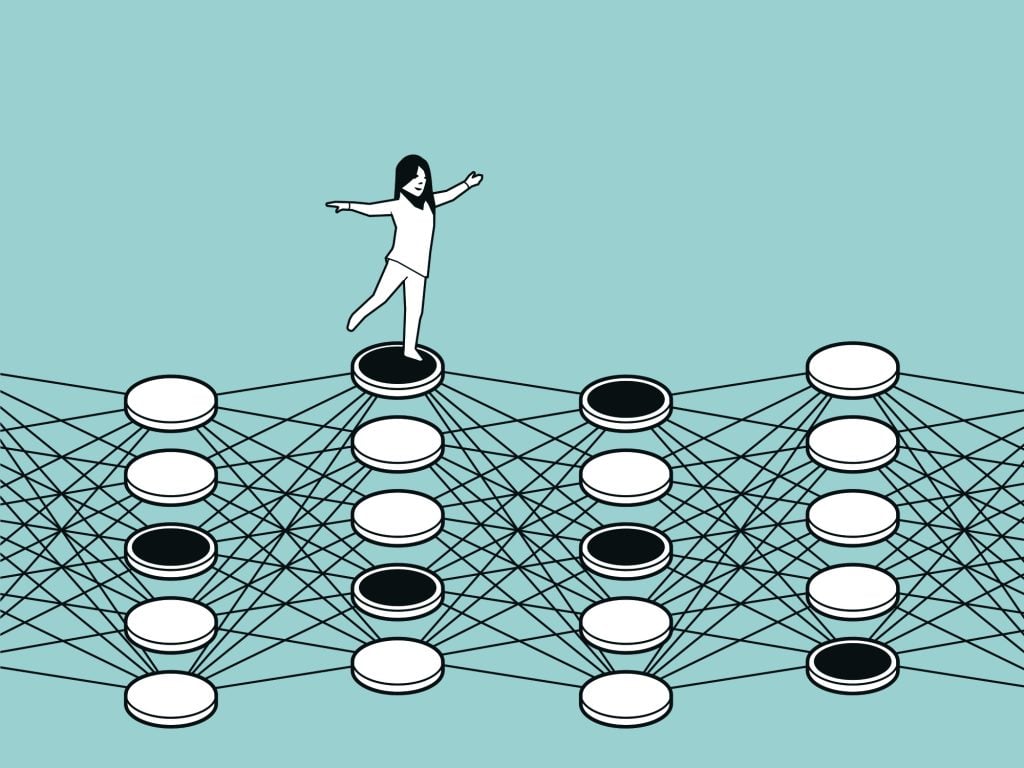

Noteworthy 2: The Nobel Prize is Awarded to the Godfathers of Machine Learning

Geoffrey Hinton and John Hopfield, the “Godfathers of Deep Learning,” were awarded the Nobel Prize in Physics for their groundbreaking work in machine learning and neural networks. This is a big deal because it acknowledges the broad impact machine learning has had on so many foundational areas of research and discovery. They and the researchers they have led have been pushing neural network discoveries forward for over 40 years. Their seminal contributions, including Hopfield networks (1982) and Boltzmann machines (1985), laid the foundation for modern machine learning. Hinton’s development of the Boltzmann machine, which builds on Hopfield’s network, has been instrumental in advancing AI’s ability to recognize patterns and generate new data examples.
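For readers curious what “recognizing patterns” looks like in a Hopfield network, here is a minimal sketch. It is not taken from the Nobel citation or the original papers. It assumes the common textbook formulation with +1/-1 units, Hebbian weights, and one-unit-at-a-time updates, and the function names `train_hopfield` and `recall` are made up for illustration. The network stores two small patterns and then restores one of them from a corrupted copy.

```python
def train_hopfield(patterns):
    """Build a weight matrix with the Hebbian rule (illustrative helper).

    Each pattern is a list of +1/-1 values. Units that agree in a stored
    pattern get a positive connection; units that disagree get a negative one.
    """
    n = len(patterns[0])
    weights = [[0.0] * n for _ in range(n)]
    for pattern in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:  # no self-connections
                    weights[i][j] += pattern[i] * pattern[j] / n
    return weights


def recall(weights, state, max_sweeps=10):
    """Update one unit at a time until the state stops changing."""
    state = list(state)
    for _ in range(max_sweeps):
        previous = list(state)
        for i in range(len(state)):
            # Weighted vote of all other units on what unit i should be.
            field = sum(w * s for w, s in zip(weights[i], state))
            state[i] = 1 if field >= 0 else -1
        if state == previous:
            break
    return state


# Two stored "memories" (chosen for this example).
stored = [
    [1, -1, 1, -1, 1, -1, 1, -1],
    [1, 1, 1, 1, -1, -1, -1, -1],
]
weights = train_hopfield(stored)

# Corrupt the first memory by flipping its first unit, then let the network repair it.
noisy = list(stored[0])
noisy[0] = -noisy[0]
recovered = recall(weights, noisy)
print(recovered == stored[0])
```

The point of the sketch is that the network is never told which unit is wrong. The stored patterns act as stable states, and a nearby corrupted input settles back into the closest one.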

Noteworthy 3: dbt Announces Product Vision at Coalesce 2024

At the recent Coalesce 2024 conference, dbt Labs introduced several significant updates to dbt Cloud, aimed at enhancing data collaboration and efficiency:

• Cross-Platform dbt Mesh: This feature enables practitioners to reference and reuse data assets across different projects and data platforms, effectively breaking down silos and fostering organization-wide collaboration.

• dbt Copilot: An embedded AI engine designed to accelerate and automate analytics workflows within dbt Cloud, currently in beta.

• Advanced Continuous Integration (CI): Enhanced CI jobs now provide detailed breakdowns of columns and rows being added, modified, or removed in the underlying data platform as a result of executing dbt jobs.

These enhancements reflect dbt Labs’ commitment to providing robust tools that empower data teams to build, deploy, monitor, and discover data assets more efficiently.

Noteworthy 4: Databricks and Amazon Collaborate to Reduce AI Costs

Databricks has entered a five-year partnership with Amazon to use Amazon’s Trainium AI chips, aiming to reduce AI development costs by up to 40% compared to traditional GPUs. This collaboration lets businesses build and customize AI models more cost-effectively using Databricks’ Mosaic AI on AWS. It is interesting to watch because this is the most significant release of a chip that competes with Nvidia since the rise of LLMs. Between competition, design efficiencies, and reduced compute costs, LLMs have been not only the technology adopted fastest by consumers but also the one whose price has dropped fastest.

Epoch AI’s recent analysis estimates the distribution of H100-equivalent GPUs among major tech companies, revealing notable industry trends. Google leads in total compute capacity due to its extensive use of Tensor Processing Units (TPUs). However, since TPUs serve various applications beyond large language models (LLMs), the exact portion dedicated to training the largest AI models remains uncertain. Interestingly, the allocation of GPUs among major cloud providers inversely mirrors their market share. Amazon Web Services (AWS), the cloud market leader, possesses the fewest GPUs, while Google Cloud, the smallest among the top providers, holds the largest number of TPUs/GPUs. These insights underscore the diverse strategies companies employ in building AI infrastructure and the varying availability of computational resources across the industry.

Read the full article in The Wall Street Journal.

Noteworthy 5: Snowflake vs Databricks…by the numbers

In a recent analysis, Robert Dale Thompson evaluates Databricks and Snowflake, two leading data platforms, focusing on cost, performance, and data accessibility.

Thompson’s testing involved processing a 1.57 billion-row dataset stored in Azure Data Lake Storage. The results indicated that Databricks provided more cost-effective and efficient data processing capabilities compared to Snowflake, particularly when handling large-scale datasets.

_____

A funny thought: