- Biweekly Data & Analytics Digest

- Posts

- Databricks Doubles Down on Product Analytics, Repos Are Breaking Themselves, and the New Velocity-First Mindset in AI Engineering

Databricks Doubles Down on Product Analytics, Repos Are Breaking Themselves, and the New Velocity-First Mindset in AI Engineering

Biweekly Data & Analytics Digest: Cliffside Chronicle

AI-Generated Code Is Breaking Your Repo, But You Can Fix It

Copilots and ChatGPT are now producing significant portions of enterprise codebases, yet much of that code bypasses traditional checks. The article outlines four major risks: dependency bloat, license contamination, insecure logic, and unreviewed merges. In short, AI-generated code behaves like an unmanaged third-party dependency (fast to adopt, slow to secure). Organizations need to build structured review and provenance pipelines, or their repos will accumulate invisible technical debt that’s harder to debug than any human error.

AI-assisted SQL, dbt, and Spark code often “works” while also rewriting data logic, drops constraints, or alters joins without notice. The same pattern repeats in app development. Banning copilots isn’t the answer. Teams need guardrails that treat LLM output as untrusted input: AI-aware linting, metadata tagging, and automated testing built into CI/CD.

The next evolution in developer productivity will be smarter governance around AI.

Shadow AI Is Putting Your Software Teams at Risk

AI is being used across engineering orgs without oversight, compliance, logging, or security review. Shadow AI mirrors the early days of shadow IT, when unsanctioned SaaS tools spread faster than governance could catch up. The article cites new data showing over half of teams are already using AI tools unofficially, leaving organizations exposed to data leakage, IP risks, and inconsistent quality standards.

The same story repeats in data and analytics teams: a well-intentioned engineer connects ChatGPT to production data, or a BI analyst runs sensitive customer fields through an AI enrichment API. When AI accelerates delivery, governance feels like friction. When you ignore it, you don’t notice until something breaks in production.

The real opportunity is to operationalize AI governance without killing the momentum. In short, make it easy to do the right thing fast.

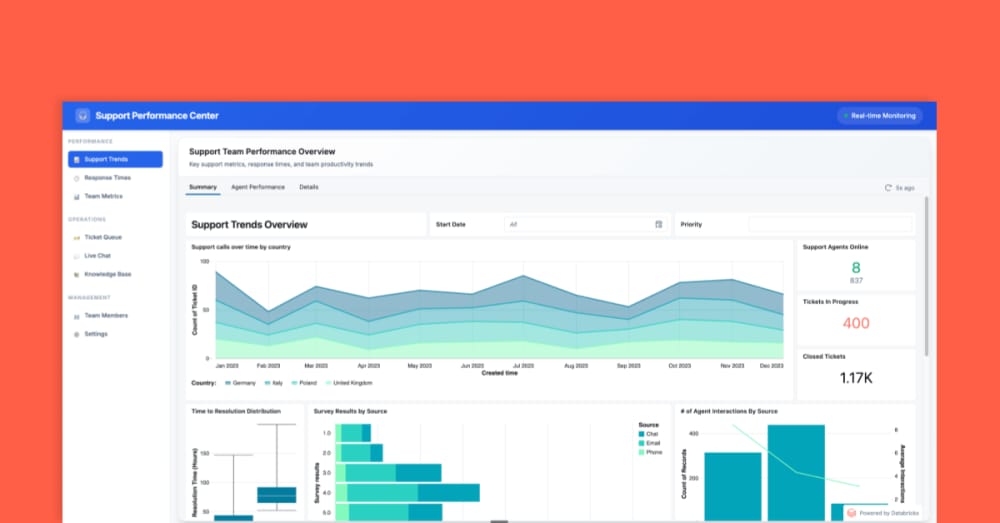

How to Embed Databricks AI/BI Dashboards Directly Into Your Products

Databricks’ new guide details how to embed AI/BI dashboards directly into customer-facing applications, turning internal analytics into a monetizable product feature. The post walks through implementation using service principals, row-level security for tenant isolation, and Databricks’ REST API to generate secure, shareable embeds. By pairing Databricks SQL with the new AI/BI workspace and Unity Catalog controls, teams can safely expose insights without compromising governance or cost efficiency.

This move blurs the line between data platform and application backend, letting developers ship analytics that live with the product, not next to it. It’s especially relevant for SaaS platforms that want to offer dynamic, AI-driven insights without spinning up separate dashboards in Power BI or Tableau.

When your warehouse, governance, and visualization layers live in one stack, you can deliver analytics as a service in days, not months.

Rethinking DSQL: Why AWS Is Collapsing the Layers Between Databases and Applications

DSQL aims to eliminate the traditional boundaries between the database and the coordination layer, letting queries run with low-latency, distributed execution while maintaining strong consistency guarantees. It’s a rethink of database systems for cloud-native workloads: fewer moving parts, asynchronous index creation, and a bias toward durability over exotic performance tricks. The piece reads like a blueprint for how AWS wants to simplify distributed data architectures without sacrificing scale or correctness.

There has been the same challenges in analytics stacks: too many layers, too much orchestration overhead, and too little clarity about where consistency actually lives. DSQL’s design suggests a future where query engines, storage, and metadata services collapse into a unified control plane (something Snowflake and Databricks are also inching toward).

AWS is betting on coherence, even if it means giving up some modularity.

Teaching AI Vision to Understand, Not Just Recognize

DeepMind’s new research explores how to make AI vision systems perceive the world less like pattern-matchers and more like humans. Instead of relying solely on pixel-level correlations, their model learns from naturalistic videos that capture physical interactions (how light, motion, and causality co-occur in the real world). The result is a representation that’s more robust to distribution shifts and adversarial noise, performing better on reasoning and generalization tasks. This marks a meaningful step beyond classic vision benchmarks like ImageNet, moving toward models that understand why objects behave the way they do, not just what they look like.

This is the next frontier for applied AI: perception that generalizes. Data teams have already learned the cost of models that overfit to narrow patterns. Vision is no different. The shift from static training sets to dynamic, physics-grounded learning mirrors what’s happening in analytics: context-rich data beats curated samples every time.

As models start to “see” like us, the conversation shifts from classification to comprehension.

Why Top AI Teams Are Shipping Fast and Forgetting About Cost, For Now

Elite AI engineering teams are prioritizing velocity over optimization. The article argues that in the current AI arms race, speed to deployment trumps efficiency. Teams at leading firms are burning through GPU budgets and compute quotas to iterate models faster, embracing hybrid infrastructure, loose MLOps guardrails, and minimal refactoring. The logic being that optimizing too early kills learning. With models and frameworks evolving monthly, premature cost discipline can leave teams behind.

The pattern is familiar: productionize first, optimize second. It’s easy to scoff at runaway cloud bills, especially for mid-market teams trying to build AI capability before their tooling ossifies. The smartest orgs are starting to define “bounded chaos”: ship fast, but track lineage, log experiments, and design for cost visibility later.

Efficiency is something you retrofit after you’ve shipped, scaled, and learned what actually matters.

Blog Spotlight: Breaking Down Data Silos: How AI Integration Transforms Business Intelligence

This post makes a compelling case for why modern BI has gone beyond dashboards and evolved to unified intelligence. AI-driven data integration is helping organizations connect fragmented systems, automate cleaning and enrichment, and surface insights that used to get buried across tools. By bringing machine learning into the integration layer, companies can finally move from reactive reporting to predictive decision-making. Breaking silos is becoming the foundation for making analytics truly intelligent.

“Data will talk to you if you’re willing to listen.”