- Biweekly Data & Analytics Digest

- Posts

- AI Agents Are Growing Up, Your BI Stack Is Getting Smarter, and Delivery Still Depends on People

AI Agents Are Growing Up, Your BI Stack Is Getting Smarter, and Delivery Still Depends on People

Biweekly Data & Analytics Digest: Cliffside Chronicle

Agentic BI: Where AI Agents, Data, and Semantics Finally Work Together

Most BI systems are still fragmented: data lives in one layer, logic in another, and business semantics are buried in tribal knowledge or hardcoded logic. Agentic BI solves this by unifying infrastructure, data, and semantics into one composable system. With tools like Unity Catalog, Delta Lake, and Mosaic AI, Databricks positions itself as the platform where data agents can understand and act on it the data it accesses.

It’s easy to get stuck translating metrics between tools, teams, and layers of legacy code, and no amount of dashboards fixes that. Agentic BI reframes the problem to expand simple visualization to actionable insight delivery, powered by systems that understand business logic. It’s a stack-level shift toward a future where agents deliver insight autonomously because the system has been built to support context instead of queries.

BI is evolving fast, and Agentic BI might be the next real unlock.

AI + Agile Lets Developers Focus on Work That Matters

The integration of AI into Agile workflows is changing the developer experience by removing the noise. AI tools are helping teams automate documentation, surface relevant context, and generate boilerplate code, which allows engineers to focus more on system design, architecture, and product craft. Agile + AI can shift developer time from process maintenance to problem-solving, enabling a tighter feedback loop between user value and engineering output.

Agile implementations end up bloated, full of rituals that no longer serve delivery. Teams can be slowed down by the very processes meant to accelerate them. AI is speeding up coding, and it’s also helping developers reclaim focus. When AI augments the low-leverage tasks, engineers get to spend more time on the high-leverage ones: understanding edge cases, improving performance, and collaborating with product teams on real outcomes.

AI might be the tool that brings your Agile process back to life. Let the machines handle the grunt work.

From Chatbots to Strategy: Turning Multi-Agent AI into Business Leverage

Multi-agent AI systems can drive real business value. Rather than relying on a single, monolithic LLM, multi-agent architectures distribute tasks and plan, collaborate, and execute with greater precision and resilience. Think: one agent for retrieving data, another for reasoning, and another for interfacing with APIs. You’ll get more modular, interpretable, and maintainable systems. The key shift is seeing agents as composable building blocks for adaptive, domain-specific AI systems aligned to business goals.

Single-agent LLM apps often hit brittle limits like bloated prompts, unclear state, and unscalable logic. In contrast, multi-agent systems can provide flexibility, fault-tolerance, and role clarity, especially in enterprise environments with complex workflows. It’s a step toward turning GenAI into an operational asset with strategy-aligned outcomes.

Multi-agent systems are becoming the foundation for scalable, AI-native operations, and they’re closer to production-ready than you might think.

What Actually Makes the Difference Between Good and Great Data Teams?

Good data teams have baseline skills like pipeline development, dashboarding, and cloud fluency matter. Great teams, however, consistently show deeper strengths, such as clear ownership, strong stakeholder alignment, data reliability, and a habit of saying no to low-leverage work. Crucially, great teams focus on business impact over busywork, avoid overengineering, and treat internal communication as a core competency instead of a soft skill.

There is a difference between teams who ship data products and those who ship business outcomes. Great teams build trust, set expectations, and measure success by how data changes decisions. It’s a mindset and maturity shift that keeps getting misdiagnosed as a hiring problem.

If your data team feels busy but not impactful, it might be time to level up the fundamentals. Technical skill gets you in the door, but strategic alignment and stakeholder trust are what make you great.

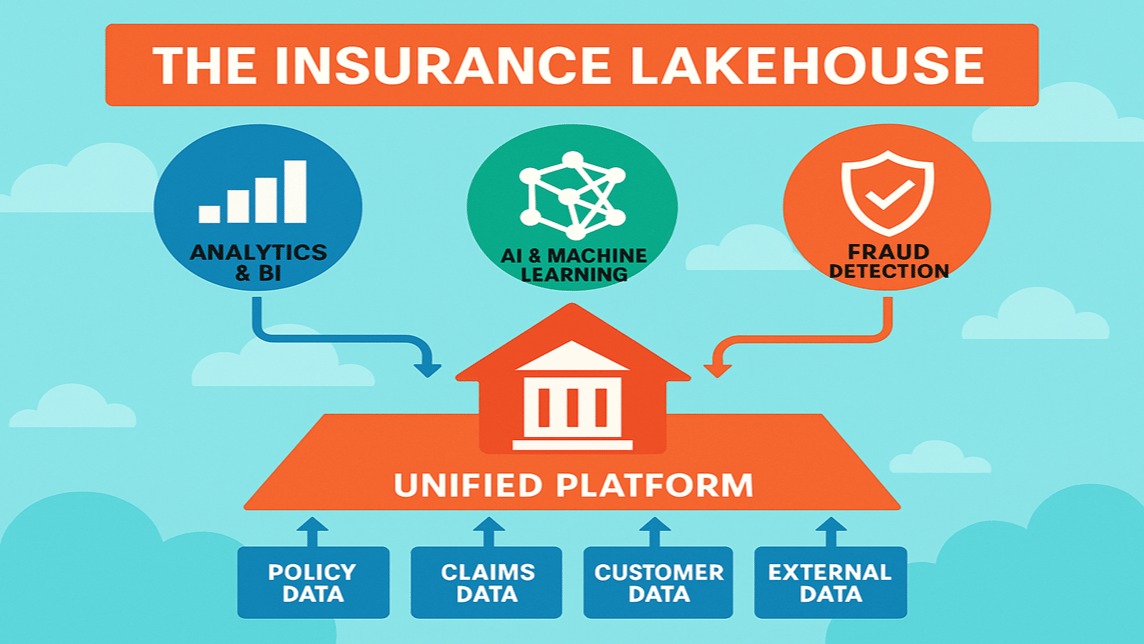

From Silos to Intelligence: Why the Data Lakehouse Is AI’s Missing Link in Insurance

The data lakehouse is emerging as the architectural key to unlocking AI in the insurance industry. Many insurers still operate in siloed environments, with fragmented data across underwriting, claims, and customer engagement systems. This results in incomplete insights and brittle AI use cases. The lakehouse model combines the scalability of data lakes with the structure and governance of warehouses, which enables a unified, governed, and analytics-ready platform that AI can reliably build on.

Insurance companies aren’t short on data. They’re short on usable, connected, and explainable data. AI pilots fall flat because the foundational architecture couldn’t support context-rich modeling or agile experimentation. The lakehouse is an efficiency play, and it’s a strategic enabler for everything from personalized pricing to fraud detection to claims automation.

AI in insurance won’t scale on siloed data. If your models don’t have context, your insights won’t have impact.

How to Scale AI Search to 10M+ Queries Without Breaking Everything

There are five proven techniques for scaling AI-powered search systems to handle 10 million+ queries efficiently, covering both architectural strategies and practical engineering tradeoffs. From index sharding and vector compression, to query caching, load balancing, and hybrid retrieval pipelines, this post offers a clear, hands-on guide to delivering low-latency, high-accuracy responses at scale. It also addresses the hidden costs (like embedding bloat, compute load, and retrieval precision) that start to matter when you move from prototype to production.

Search is often the first real-world application of GenAI inside a company, whether for internal knowledge bases, customer support, or product discovery. Without the right infra, most teams hit a wall fast. Promising RAG pilots will buckle under production traffic because they weren't built with scale in mind.

If your search system is great in dev but choking in prod, you’re not alone. Scaling to 10M+ queries needs bigger infra but it also needs smarter design.

Blog Spotlight: Llama 2: Generating Human Language With High Coherence

Meta’s LLama 2 shows that open models are rapidly closing the gap with proprietary systems in terms of coherence, reasoning, and usability, while offering far more control and transparency. The blog post breaks down how LLama 2 works, its strengths in instruction tuning and modularity, and how it could impact custom LLM deployments, fine-tuning workflows, and cost-effective GenAI stacks. If you're curious about the future of open-source AI, this is a great place to start.

“The greatest danger of AI is that people conclude too early that they understand it.”