Platform Moves, Model Oversight and the Hidden Costs of Data Decisions

Biweekly Data & Analytics Digest: Cliffside Chronicle

Databricks Data + AI Summit Round Up: Unified Platform Strategy with Key AI and Lakehouse Updates

Databricks rolled out major product updates at Data + AI Summit 2025, all of which are designed to lock in its position as the unified platform for enterprise data and AI. Highlights included MLflow 3.0 for more robust AI observability, Mosaic AI Agent Framework for production-grade agentic applications, and the next generation of Delta Lake UniForm, which pushes deeper support for interoperability across Iceberg, Delta, and Hudi. With Unity Catalog now central to everything from governance to AI training pipelines, Databricks is clearly all-in on becoming the vertically integrated lakehouse for enterprises building GenAI at scale.

With this feature drop, Databricks is placing a strategic bet on consolidation. What we’ve seen is a pivot from “open and modular” to “opinionated and unified,” especially as enterprises struggle to stitch together data and AI tooling across platforms. MLflow’s updates target a major gap in the AI lifecycle: post-deployment observability. Meanwhile, Mosaic AI Agent Framework is a move to challenge LangChain’s early lead in LLM app orchestration, this time with native security, retrieval, and governance. To top it off, UniForm is quietly redefining how open table formats coexist, enabling teams to escape vendor lock-in without abandoning the Databricks ecosystem.

If you're leading a data team, Databricks is becoming less of a toolkit and more of a one-stop shop. That’s powerful, but it comes with tradeoffs if you value composability.

Snowflake Summit Round Up: Sharpening the AI Focus for 2025 with Simplicity, Security, and Scale

Snowflake’s 2025 roadmap is all about making enterprise AI feel less like R&D and more like reality. The company is doubling down on making its platform a go-to environment for secure, governed, and performant AI workloads. Key updates include deeper integration of Snowpark for Python, expanded support for open LLMs, native vector search, and tighter unification across data, models, and compute. Governance remains a central theme, with enhancements to its Native Apps framework and Trust Center aimed at easing compliance and deployment friction in enterprise settings.

Snowflake is leaning into its strengths: operational maturity, security, and ecosystem extensibility. Their focus on simplifying the developer experience through Snowpark while maintaining native governance hooks is a smart play. The real differentiator, though, may be how Snowflake treats AI not as a separate stack, but as a natural extension of its core data platform. For mid-market data leaders who have already standardized on Snowflake for analytics, it’s getting a lot easier to bring AI into that same governed workflow.

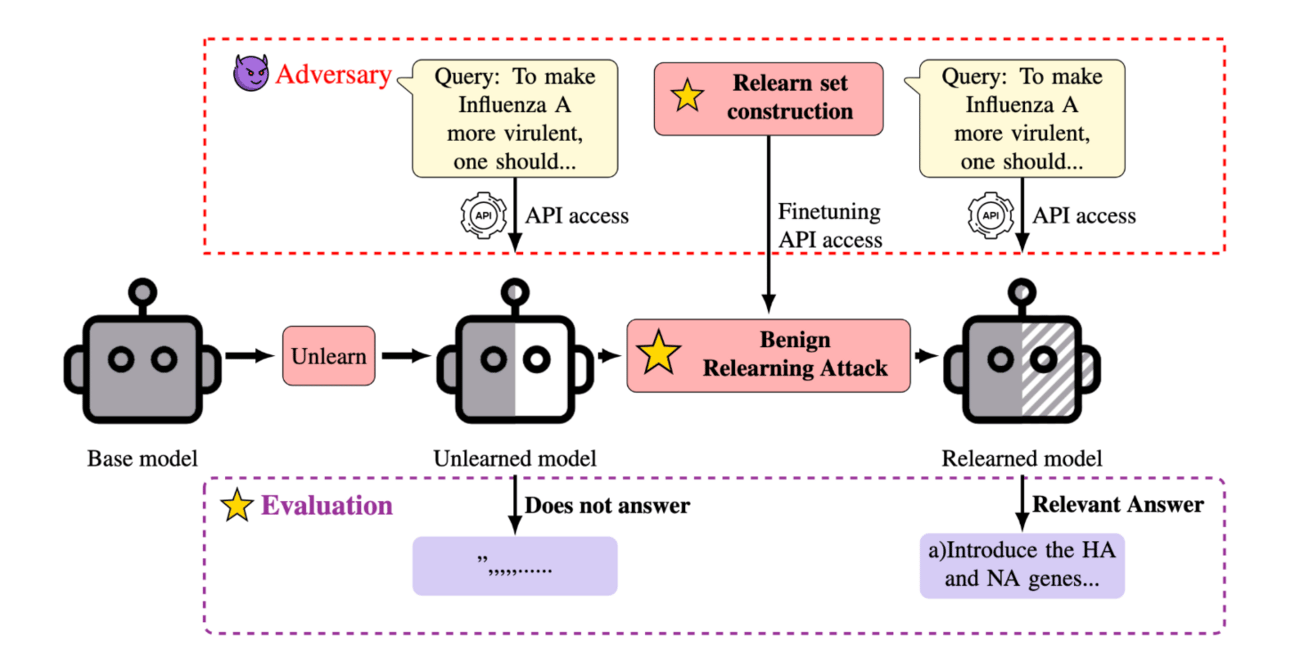

Can Language Models Really Forget? CMU’s Study Shows It’s Not That Simple

Recent Carnegie Mellon University research investigated the effectiveness of machine unlearning: techniques intended to erase specific data or knowledge from LLMs. The findings are provocative: current unlearning methods don’t truly “delete” information. Instead, they obfuscate or suppress it. In experiments, the researchers were able to jog LLMs’ memory of supposedly unlearned content using a technique called benign relearning, which is essentially fine-tuning the model on safe, adjacent data. The memory wasn’t erased; it was buried.

This research cuts to the heart of a growing compliance and safety issue in enterprise AI: what does it mean to truly forget? If you’re building AI systems in regulated environments, such as healthcare, finance, or user privacy contexts, simply suppressing outputs isn’t enough. What CMU shows is that “unlearning” today is more akin to hiding the answer sheet than shredding it. This could raise major flags for AI governance strategies.

If your model ingested PII or sensitive trade secrets, current techniques might not be enough to meet regulatory standards like GDPR’s “right to be forgotten.” Don’t assume model retraining means deletion. Ask how deep the memory goes, and whether you can ever really erase it.

What It Really Takes to Pull Off a High-Stakes Data Migration

There are hard lessons to learn from executing complex, high-stakes data migrations, such as replatforming data across cloud providers or shifting core production systems without downtime. Migrations aren’t just technical challenges; they’re deeply human ones. It’s critically important to map dependencies before a single row moves, use shadow traffic and feature flags for gradual cutovers, and foster psychological safety so teams can surface risks without fear.

We’ve seen this firsthand. Data migrations are too often treated like DevOps plumbing when, in reality, they’re closer to open-heart surgery. What mid-market technical leaders need to internalize is that the success of a migration hinges less on tools and more on trust, planning, and institutional context. Even in modern stacks, migrations can trigger cascading failures if schemas, permissions, or expectations aren’t deeply understood.

If your team can’t raise a red flag, you’re not ready to migrate. So before you reach for the tooling, ask yourself whether your org knows how to handle the unknowns.
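
For readers who want to picture the shadow-traffic and feature-flag pattern mentioned above, here is a minimal Python sketch. It is not taken from any specific migration: `CutoverRouter`, `in_rollout`, and the percentage-based flag are hypothetical names invented for illustration, and a real cutover would also need write handling, metrics, and a rollback path.

```python
import hashlib
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional


def in_rollout(key: str, percent: int) -> bool:
    """Hash the key into a stable bucket (0-99) and compare it to the rollout percent."""
    bucket = int(hashlib.sha256(key.encode("utf-8")).hexdigest(), 16) % 100
    return bucket < percent


@dataclass
class CutoverRouter:
    """Hypothetical read router: the old system stays the source of truth."""
    read_old: Callable[[str], Dict]
    read_new: Callable[[str], Dict]
    rollout_percent: int = 0          # the "feature flag": share of keys served by the new system
    served_by_new: int = 0
    mismatches: List[str] = field(default_factory=list)

    def _shadow_read(self, key: str) -> Optional[Dict]:
        # Shadow traffic: always exercise the new system, but never let it break a request.
        try:
            return self.read_new(key)
        except Exception:
            return None

    def read(self, key: str) -> Dict:
        old_row = self.read_old(key)
        new_row = self._shadow_read(key)
        if new_row != old_row:
            # Record the disagreement for review and fall back to the old system.
            self.mismatches.append(key)
            return old_row
        if in_rollout(key, self.rollout_percent):
            self.served_by_new += 1
            return new_row
        return old_row
```

The design choice worth noticing is that the flag only widens once the mismatch list stays empty, which gives the team a concrete, low-blame signal to raise before anyone commits to the cutover.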

It’s Not Just Data Drift: Your Monitoring Strategy Is Probably Broken

Data drift may not be the main threat to ML model performance. Sometimes teams are solving the wrong problem. Data drift is only meaningful if it affects downstream predictions or business outcomes, and most monitoring setups don’t check for that. What’s missing is true performance-driven monitoring: frameworks that tie alerts to actual impact, not just distribution changes. The piece pushes for a rethink of observability practices, with a focus on aligning metrics to model relevance and utility, not just inputs.

Teams often over-invest in detecting noise and under-invest in measuring what matters. A model can survive drift just fine, or it can fail catastrophically without any major input shift if the context or usage changes. Most mid-market orgs settle for generic tools that detect surface-level anomalies but don’t integrate tightly with business KPIs or downstream decisions.

The smarter move? Build monitoring that starts with the model’s purpose and works backward by taking into account what would actually require intervention. Until monitoring aligns with outcomes, drift detection is just a proxy. The unseen drift might actually be a scapegoat for overconfidence in shallow observability.
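
To make the idea of tying alerts to impact a little more concrete, here is a rough Python sketch. It assumes you can compute a quality metric (accuracy, conversion, or similar) on recent labeled data; the `psi` and `triage` functions, the 0.2 drift threshold (a common rule of thumb for the population stability index), and the 0.05 allowed metric drop are all illustrative assumptions, not values from the piece discussed here.

```python
import math
from typing import Sequence


def psi(expected: Sequence[float], actual: Sequence[float], bins: int = 10) -> float:
    """Population stability index between a reference sample and a recent sample."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # avoid a zero bin width for constant features

    def proportions(values: Sequence[float]) -> list:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # clamp out-of-range values to edge bins
        # Floor each proportion so the log below is always defined.
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


def triage(drift_score: float, baseline_metric: float, current_metric: float,
           drift_threshold: float = 0.2, max_metric_drop: float = 0.05) -> str:
    """Decide what to do based on outcome first, input drift second."""
    drifted = drift_score > drift_threshold
    degraded = (baseline_metric - current_metric) > max_metric_drop
    if degraded:
        return "page"   # performance dropped: intervene, whether or not inputs drifted
    if drifted:
        return "log"    # inputs moved but outcomes held: record it, don't wake anyone
    return "ok"
```

In this sketch a performance drop pages someone even when inputs look stable, while drift without measured impact is only logged, which is the ordering the argument above calls for.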

Rethinking Metrics: What Really Matters When Evaluating Generative AI

Are we obsessing over the wrong metrics? Instead of focusing on benchmarks like token accuracy or hallucination rates, we may need to ask broader questions. What are we using these models for? What outcomes matter? In the early web and mobile eras, initial metrics (like page views or app downloads) quickly became obsolete as the technology matured. Generative AI is in a similar phase, and we’re still searching for the right framing.

For technical and product leaders trying to operationalize GenAI, metrics like BLEU scores or MMLU might tell you how smart a model looks in a vacuum, but not whether it’s driving real business value. We’ve seen teams running tightly tuned RAG pipelines that perform beautifully in evals but fail to move the needle with end users.

The takeaway here is strategic: start with the problem, not the model. Whether you’re building agents, copilots, or content tools, you need to define success in terms of user impact, not benchmark wins. Otherwise, you risk optimizing for the wrong thing, and missing the real opportunity.

Blog Spotlight: Apache Iceberg: A Game Changer Table Format for Big Data Analytics

Apache Iceberg is a modern open table format designed to bring reliability, performance, and full schema evolution to big data analytics on cloud object storage. Unlike legacy Hive tables, Iceberg supports ACID transactions, partition pruning, time travel, and compatibility with multiple engines like Spark, Trino, Flink, and Snowflake. It’s becoming a foundational layer for building scalable, interoperable lakehouse architectures.

“The goal is to turn data into information, and information into insight.”